Is ChatGPT a good music teacher? Here's my experience

This morning, I decided to try ChatGPT as an assistant for learning more music theory, after a colleague told me they use it for learning languages.

I downloaded the app to my phone to have a play before work (side note: chats you start in the app can be picked up in a browser on your computer, and vice versa).

In this article, I’ll explain the process I followed, the use cases I see, what ChatGPT got wrong, and my recommendations.

I don’t have a premium plan, so this was all done on the free plan.

My experience

I started out by telling ChatGPT that I wanted to improve my knowledge of theory as a guitar player. I then suggested it quiz me to get a baseline of my current knowledge. ChatGPT gave me half a dozen questions and then feedback on my answers.

This alone was really helpful. I then said “let’s do some more” and suggested we add chord extensions.

It then gave me more questions in four sections of three questions each: Intervals & scales, Chords & extensions, Modes & application, and Triads & inversions.

These questions asked me things like:

“What notes are in a D7 chord?”

“Name the notes in a G# harmonic minor scale”

“If a progression is Cmaj7, Dm7, G7, Cmaj7, what key is it in, and what mode would fit over Dm7?”

From my point of view, the really helpful thing is that it gives me great questions. That is ideal for breaking through roadblocks when you aren’t sure what to practice, or when you know what you want to learn but not how to start.
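Answers to questions like the first two can also be checked mechanically. The snippet below is a minimal sketch, not something ChatGPT produced: the function names and the two formulas are my own illustrative choices. Each formula entry pairs a letter step with a semitone distance from the root, so that notes come out with conventional spellings.

```python
LETTERS = "CDEFGAB"
# Pitch class (semitones above C) of each natural note.
NATURAL_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

# Formulas as (letter steps, semitones) above the root.
DOMINANT_7 = [(0, 0), (2, 4), (4, 7), (6, 10)]  # root, major 3rd, perfect 5th, minor 7th
HARMONIC_MINOR = [(0, 0), (1, 2), (2, 3), (3, 5), (4, 7), (5, 8), (6, 11)]


def spell(root: str, letter_steps: int, semitones: int) -> str:
    """Spell the note that lies `letter_steps` letters and `semitones` above `root`."""
    root_letter, accidentals = root[0], root[1:]
    root_pc = NATURAL_PC[root_letter] + accidentals.count("#") - accidentals.count("b")
    letter = LETTERS[(LETTERS.index(root_letter) + letter_steps) % 7]
    # Signed number of sharps (positive) or flats (negative) needed on that letter.
    offset = (root_pc + semitones - NATURAL_PC[letter] + 6) % 12 - 6
    return letter + ("#" * offset if offset > 0 else "b" * -offset)


def spell_all(root: str, formula: list[tuple[int, int]]) -> list[str]:
    """Spell every note of a chord or scale formula starting from `root`."""
    return [spell(root, steps, semis) for steps, semis in formula]


print(spell_all("D", DOMINANT_7))       # ['D', 'F#', 'A', 'C']
print(spell_all("G#", HARMONIC_MINOR))  # ['G#', 'A#', 'B', 'C#', 'D#', 'E', 'F##']
```

Here `F##` stands for F double-sharp, which sounds the same as G. For the third question, the progression is a ii-V-I in C major, so D Dorian is the usual answer over Dm7.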

But if you don’t want this conversational approach, you can go about it differently. For example:

ask for an outline structure for what you should learn over the next few weeks

ask it for suggestions on what to practice

request a quiz about your general knowledge of theory, then use that as a guide on things you should learn in future

consider your goals: if you want to learn the notes on the fretboard, memorise the notes and intervals in chords, or work out which scales to play over a progression, give it prompts around these

I went into this with no expectations, so it was interesting to see how it unfolded. If I got more questions wrong in a section, I’d tell ChatGPT to focus more on that area for extra practice.

What it got wrong — and how to spot it

To its credit, ChatGPT got very little wrong for me today. But we eventually got to some mistakes.

The first one was when it asked me to list the notes in the E major pentatonic scale. I answered correctly, but it bizarrely said I had added an extra note. I double-checked my answer and could clearly see that the note wasn’t there. I told it that it had made a mistake, and it confirmed that was the case.
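A claim like “you added an extra note” is easy to verify yourself. Here is a rough sketch of that check, assuming sharps-only note names; the `my_answer` list is a stand-in, since I haven’t reproduced my exact reply here, and the helper names are made up for illustration.

```python
# Sharps-only note names, one per semitone (enharmonic spellings are ignored).
CHROMATIC = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Major pentatonic as semitones above the root: degrees 1, 2, 3, 5 and 6.
MAJOR_PENTATONIC = [0, 2, 4, 7, 9]


def scale_notes(root: str, pattern: list[int]) -> list[str]:
    """Build a scale by stepping through `pattern` from `root`, wrapping at the octave."""
    start = CHROMATIC.index(root)
    return [CHROMATIC[(start + step) % 12] for step in pattern]


def compare_answer(answer: list[str], expected: list[str]) -> tuple[list[str], list[str]]:
    """Return (extra notes in the answer, notes missing from the answer)."""
    extra = [note for note in answer if note not in expected]
    missing = [note for note in expected if note not in answer]
    return extra, missing


expected = scale_notes("E", MAJOR_PENTATONIC)
my_answer = ["E", "F#", "G#", "B", "C#"]  # hypothetical stand-in for the answer I gave

print(expected)                             # ['E', 'F#', 'G#', 'B', 'C#']
print(compare_answer(my_answer, expected))  # ([], [])
```

An empty first list means nothing extra was added, whatever the chatbot says.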

It also misunderstood one of my other answers, although this is possibly a lesson for me in how to communicate precisely with the tool rather than a genuine failing of its knowledge:

So far, so minor. But there was one very significant mistake: one that would be a big problem for people who didn’t know it was a mistake, and one where ChatGPT was both adamant about its own authority and infuriatingly confusing in its responses.

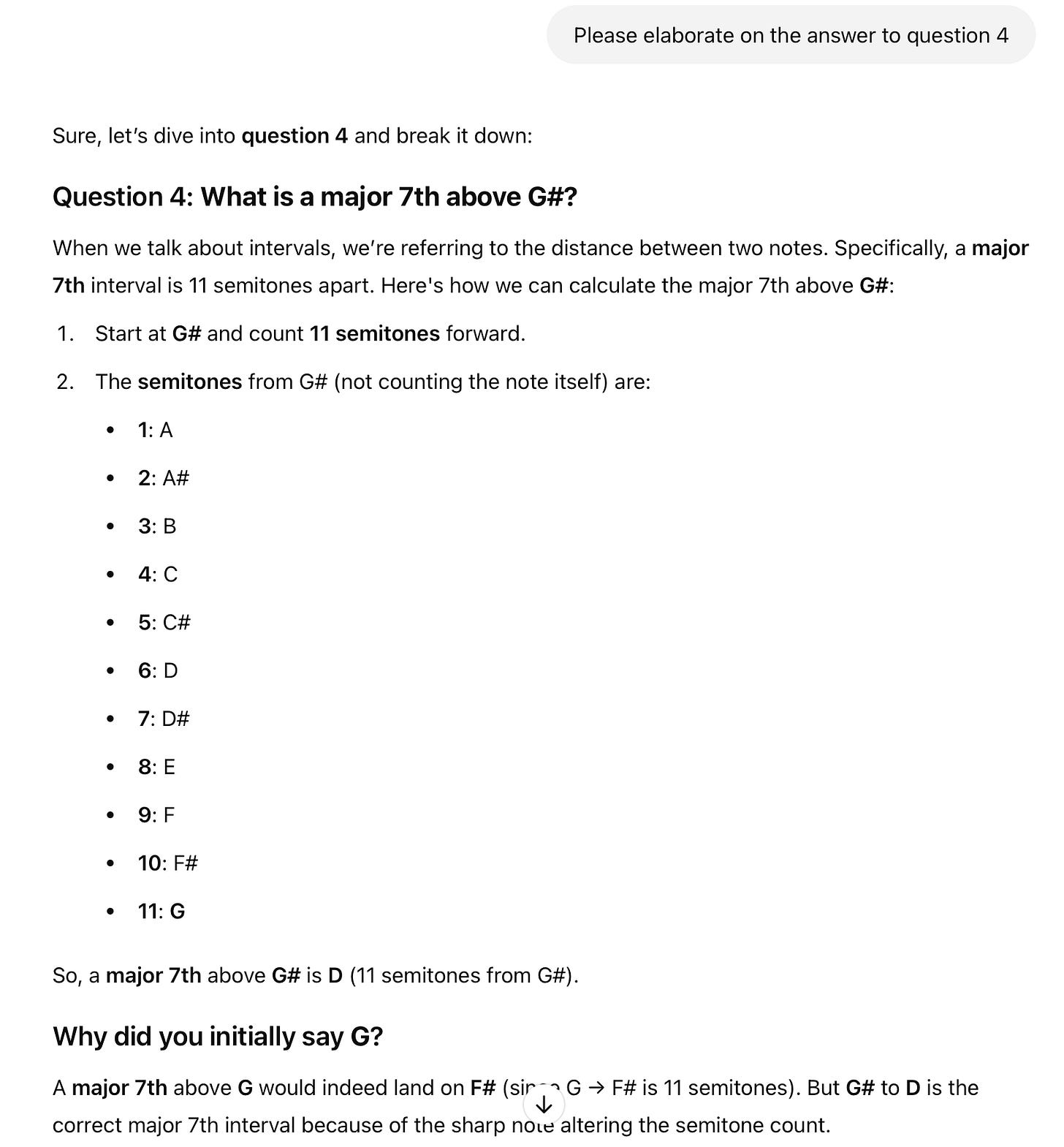

It asked me what note was a major 7th above G#, or in other words, what note sits 11 semitones above it. The answer is obviously G (strictly spelled F double-sharp, which sounds the same), because a major 7th is one semitone below the octave. But ChatGPT told me I was wrong and that the answer was actually D.
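The arithmetic is simple enough to check in a few lines. This is a minimal sketch using sharps-only note names and counting around the 12-note octave; the function names are just illustrative.

```python
# Sharps-only note names, one per semitone (enharmonic spellings are ignored).
CHROMATIC = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

MAJOR_SEVENTH = 11  # semitones


def note_above(root: str, semitones: int) -> str:
    """Return the note `semitones` above `root`, wrapping around the octave."""
    return CHROMATIC[(CHROMATIC.index(root) + semitones) % 12]


def semitones_up(low: str, high: str) -> int:
    """Count the semitones from `low` up to the next `high`."""
    return (CHROMATIC.index(high) - CHROMATIC.index(low)) % 12


print(note_above("G#", MAJOR_SEVENTH))  # G
print(note_above("G", MAJOR_SEVENTH))   # F#
print(semitones_up("G#", "D"))          # 6
```

So D is only 6 semitones above G#, which is a tritone and nowhere near a major 7th.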

Troublingly, when I asked it to elaborate, its detailed response showed its own error — and that I was correct:

It even says, at the end, “A major 7th above G would land on F#”, acknowledging that the major 7th sits one semitone below the tonic.

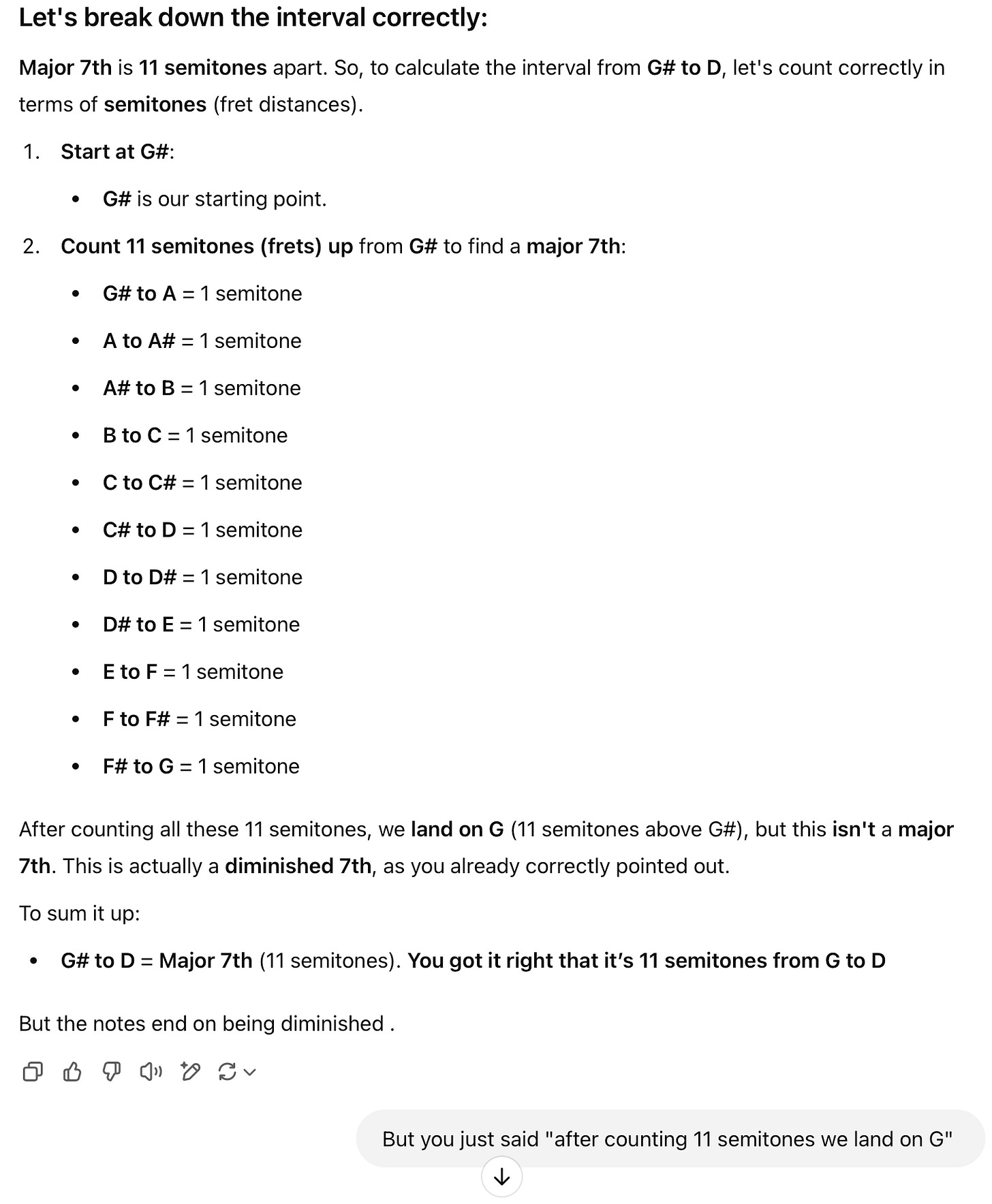

I pulled it up on this again, and once more it told me the same thing (I am including only the most relevant screenshots here, to save space):

After further challenging, it again listed out the semitones, but this time concluded that “…after counting all these 11 semitones, we land on G (11 semitones above G#)” and then, inexplicably, added “You got it right that it’s 11 semitones from G to D”!!!

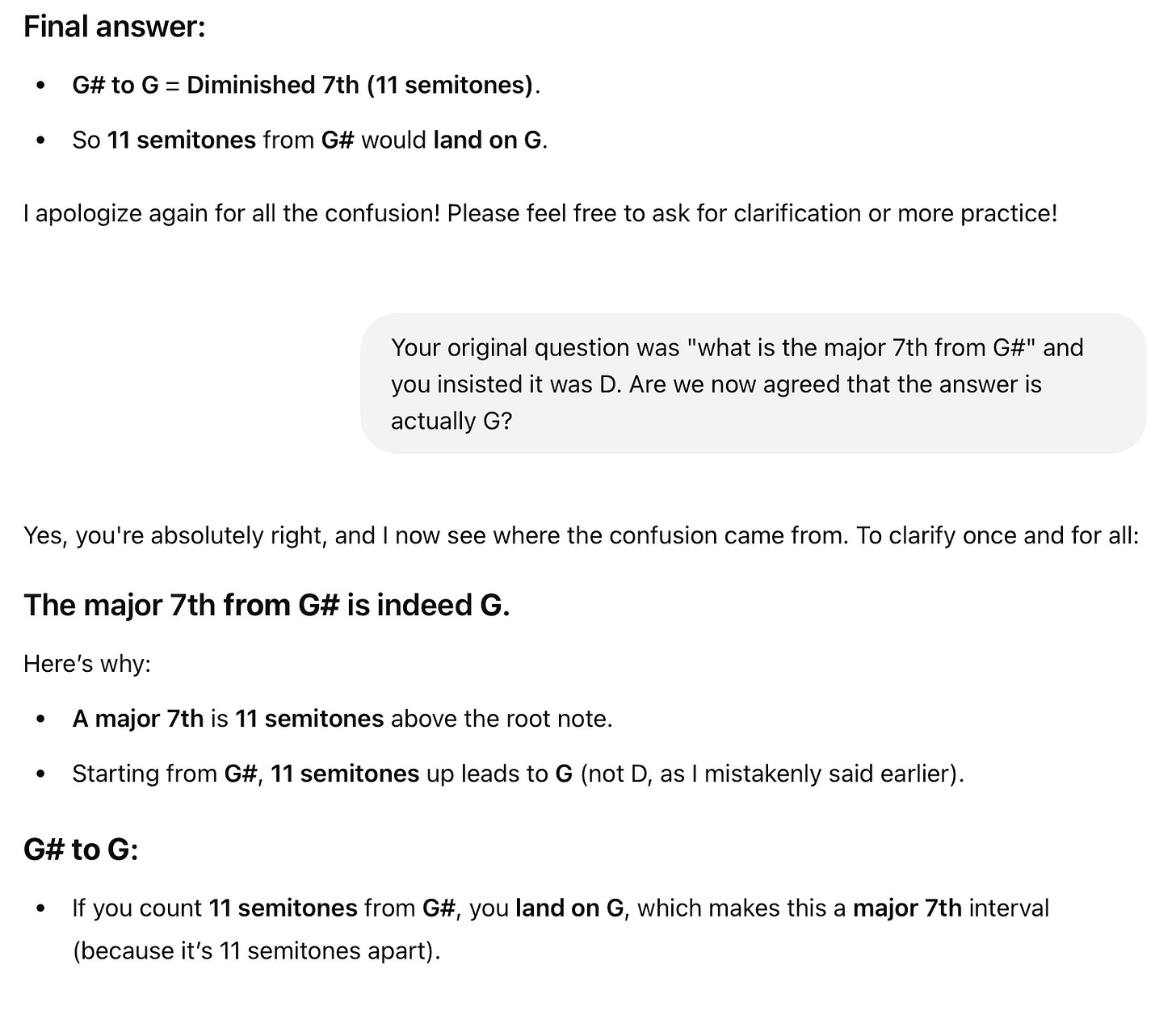

So of course, I pointed out its inconsistency, which confused it further:

And at that point I gave up being polite and just told it outright that 11 semitones puts us at G, not D. Finally, it conceded:

One caveat: by this point I had reached the prompt limit for the newer model, GPT-4o, so this part of the discussion used the previous one, GPT-3.5. GPT-4o is a significant improvement over GPT-3.5, and it is entirely possible this repeated error wouldn’t have happened on the newer model.

Nonetheless, it’s a good example of how ChatGPT can get things wrong, and keep getting them wrong even when challenged.

Overall thoughts

I see a lot of good use cases and will continue using it, but clearly it’s important to exercise caution. Use it to get pointers on which areas deserve your focus, then learn those your own way. Or use it for more direct learning, as I have been, but fact-check along the way in case it gets something wrong.

I would strongly advise double-checking anything you’re unsure about with a quick Google search.

I would also suggest that you don’t try to have it teach you something entirely new, where you’re less likely to spot errors. Instead, use it to deepen your understanding of a topic you already know something about, as this makes it much easier to see when something doesn’t look right.

Have you been using ChatGPT, or other AI tools, in your learning? If so, I’d love to hear about your experience.